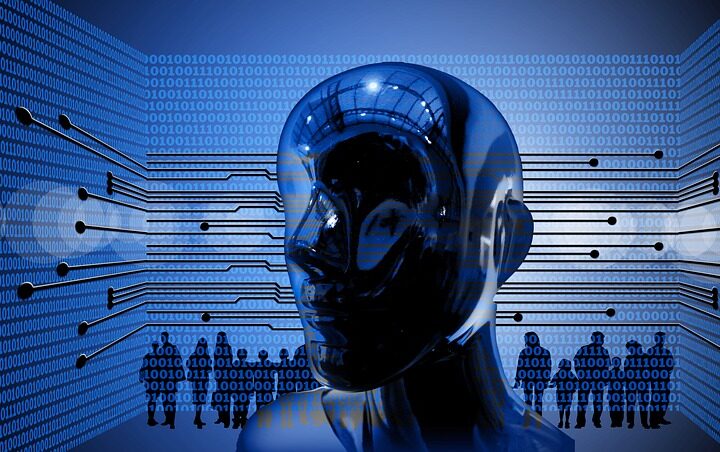

It may seem outlandish now, but in the not-so-distant future, we might be able to call on voice assistants like Alexa and Siri to help detect dementia and early cognitive impairment.

Researchers at Dartmouth-Hitchcock and the University of Massachusetts Boston have received a 4-year, $1.1 million grant from the National Institute on Aging (NIA) to study whether voice and language patterns captured by voice assistants like Alexa, Siri or Google Home could help identify early-stage dementia or cognitive impairment.

“We are tackling a significant and complicated data-science question: whether the collection of long-term speech patterns of individuals at home will enable us to develop new speech-analysis methods for early detection of this challenging disease,” Xiaohui Liang, an assistant professor of computer science at the University of Massachusetts Boston, said in a news release.

“Our team envisions that the changes in the speech patterns of individuals using the voice assistant systems may be sensitive to their decline in memory and function over time,” Liang added.

As part of the new grant, the researchers will collect data from participants in the coming months and analyze it using machine learning and deep learning techniques. Past research has shown that memory loss isn’t the only symptom of dementia; changes in speech can also be a symptom.

The researchers said that early diagnosis of Alzheimer’s disease and related dementias in older adults living alone is essential for developing plans and ensuring adequate support at home for patients and their families. The new grant seeks to develop what could become a low-cost and practical home-based assessment method using voice assistant systems for early detection of cognitive decline.

“Alzheimer’s disease and related dementias are a major public health concern that lead to high health costs, risk of nursing home placement, and place an inordinate burden on the whole family,” said John Batsis, a geriatrician and an associate professor of medicine at the Geisel School of Medicine at Dartmouth, in the news release. “The ability to plan in the early stages of the disease is essential for initiating interventions and providing support systems to improve patients’ everyday function and quality of life.”

The grant funds an 18-month laboratory evaluation and a 28-month home evaluation, focusing on whether voice assistant systems can measure and predict cognitive decline in home participants over time.

In an interview with Being Patient, Batsis said that, if the study is successful, devices such as Alexa or Google Home could ideally become home-based tools to help identify speech patterns associated with dementia.

“Ultimately we want to develop a system that can subsequently be placed into people’s home, almost like enabling an Alexa skill,” Batsis said. “We could enable a skill that could be used for home-based tracking for patients, and their caregivers—who may have some concerns about their loved ones’ cognitive health—would be able to monitor that.”

In an interview with the Associated Press, Batsis acknowledged that many challenges lie ahead in developing a system that people would feel comfortable using in their homes and that could make sense of many different languages, as well as of speech from people who don’t speak clearly.

“These are all practical and pragmatic problems,” Batsis said.

He also told Being Patient that the goal of using voice assistants for diagnosis wouldn’t be to replace current clinical evaluations like memory tests, but rather to enable earlier detection.

“It’s really about early planning, not to replace evaluation,” Batsis said. “One of the biggest challenges in dementia is late diagnosis, late presentation to the clinician. Our goal is to bring that timeline forward a bit, to give families opportunities to plan and think ahead of all the issues while the patient is still able to make decisions, and that’s really key.”