UCLA dementia researcher Mayank Mehta is using VR in mouse studies to explore how to translate Alzheimer’s mouse study results to human clinical trials.

There are dozens of new approaches to treating Alzheimer’s currently in clinical trials. But while some promising innovations in diagnostics and intervention are progressing faster than ever before, Alzheimer’s drugs still have a 99 percent failure rate once they reach human clinical trials. UCLA professor and neurophysics researcher Mayank Mehta is exploring a way to make those trials more effective. Virtual reality, or VR, an emerging technology known for its gaming capabilities, is increasingly being applied in dementia research and therapy, from helping Alzheimer’s patients recall old memories to enabling caregivers to better understand the first-hand experience of living with dementia. Now, Mehta is leveraging VR to try to improve the way Alzheimer’s drugs are tested.

Mehta directs the Center for Physics of Life and the Keck Center for Neurophysics, and his research focuses on the biophysical mechanisms by which the brain evolves, creates, learns, and forms memories. His laboratory has developed a new VR environment, which he also uses in other studies on learning, that can test rodents under conditions similar to those used for humans.

Mehta believes VR can help map the way the brain understands space and time, and that learning how it does so could help unlock secrets to better brain health. Because Alzheimer’s treatments that work in mice usually don’t turn out to be effective in humans, his lab uses the VR learning environment to get more accurate insights into cognitive function for Alzheimer’s research. In the conversation below, he joins Being Patient EIC Deborah Kan to discuss some of what VR has revealed about the brain and to talk through his latest research.

Watch the conversation or read a transcript of the interview’s highlights below.

Being Patient: We know that a lot of technology is trying to figure out things we don’t know about the brain. Specifically, what are you looking to discover in your work at UCLA?

Mayank Mehta: I’m a professor in the Department of Physics. I did a Ph.D. in theoretical physics: how the universe began, the structure of space-time, very mathematical stuff. Now, I’m a professor not only in physics but also in the Department of Neurology, as well as in the Department of Electrical and Computer Engineering. So, why would I be studying the brain? After all, physics and electrical engineering don’t seem to have anything to do with the brain. The short answer is that a long time ago, people discovered that many of us who may have dementia or Alzheimer’s, or even younger children who may have memory disorders or learning disabilities, don’t have a problem with their eyes. Most of them can see quite well (some have eye problems, but those are separate), and they can hear quite well. But somehow, something goes wrong, and they’re not able to learn.

Remember, many of them can walk, talk, eat, and take care of themselves, except at the very late stage of Alzheimer’s, which we are going to talk about later. So there are a large number of human beings who seem to be able to hear, see, walk, eat, and do everything well, but who struggle with this strange thing called forming a concept. For example, you can ask an Alzheimer’s patient, especially in the earliest stages, when they’re still talking, “What did you have for lunch?” and the patient will say, “I don’t know. Beans and potatoes.”

The Alzheimer’s patient [in later stages] might struggle. Clearly, the patient who ate the food could recognize it as beans and potatoes but somehow didn’t remember eating it. This is called episodic memory: there was a specific episode that happened, and that memory is somehow missing. It’s not that the sounds are missing, or the lights are missing, or the ability to recognize is missing. Somehow, this set of things, such as the smell of the potatoes, the color of the beans, the taste, and the movements I made, all those complicated things didn’t come together. I got interested in that because of the amazing work done by a large number of scientists. Neuroscientists have figured out how one part of the brain, for example, responds to sight.

Being Patient: What part of the brain is that?

Mehta: The primary visual cortex, in the back, and that was a Nobel Prize-winning discovery, showing that this part really cares about vision. Here, the frontal cortex cares about decision-making. On the side, you have the homunculus, the motor cortex, the auditory cortex, and so on. Each of these parts of the brain is taking care of one thing. We know that quite well, and the question is, who is putting it all together?

We started with the hypothesis that maybe this is what goes wrong in so many learning disorders. Take a child sitting in school: they can see the blackboard or the whiteboard, and they can hear sounds, but they’re not able to fit things together. So maybe that’s the problem. How does the brain do that?

The next level of the question was this: when I had an experience of beans and potatoes, I had a certain set of experiences, each of them very specific, such as the taste, or the moment I cut the potato with the knife. At the end of the day, I don’t remember all that; I have extracted a concept called a lunch of beans and potatoes. That’s a very sophisticated thing. It’s not about perceiving the beans or potatoes; it’s about putting those things together, abstracting them, and getting the gist of it to say that it was lunch. How does the brain create such abstract ideas?

Being Patient: What has virtual reality enabled you to learn about this process?

Mehta: Right. So in order to do that, I want to first tell you a story. And this story is a very simple one. And it goes to the heart of the bigger problem that we are here to discuss, which is that human beings have been studying the brain for a long time, the human brain, the mouse brain, the rat brain, and so on. And they have not been able to cure Alzheimer’s. Why is that?

One possibility is that there is some unknown mystery about the brain, and that’s why we haven’t cured Alzheimer’s. But let me tell you a secret. Alzheimer’s has actually been cured. There are at least 20 drugs that have cured Alzheimer’s in mice. And those drugs that cured Alzheimer’s in mice failed in humans.

Being Patient: Most mouse studies do not translate into humans, right?

Mehta: In Alzheimer’s, yes. It’s not true for Tylenol. It’s not true for diabetes drugs, or heart attack drugs, or lots of other drugs. We test all drugs in mice because, unfortunately, to measure whether a drug is doing the right thing or not, we need to make some invasive measurements of the heart or the brain, which we can’t do in healthy humans because it causes damage. So, for safety reasons, we first test a therapy in animals to make sure it works and doesn’t cause side effects. Then we test it in humans. The weird thing is that Alzheimer’s has been cured in mice by 20 drugs, not one or two, and all of them failed. It’s such a big problem that major pharmaceutical companies like Pfizer have stopped doing research on Alzheimer’s because they lost billions of dollars. The translation didn’t work. In my mind, there is one unique problem with learning and memory diseases, especially Alzheimer’s: drugs work in mice, they don’t work in humans, and we can’t go directly to humans. Heaven forbid there is a nasty side effect and patients suffer even more.

The main challenge has been how to translate drugs from mice to humans. That immediately poses a question: how do I ask a mouse, “Hello, Mr. Mouse. What did you have for lunch today?” I can’t. I can test whether the mouse can see something or hear something. I can test rather easily whether something startles the mouse. But how do I test episodic memory in a mouse? I want to start with an ancient story which we humans forget. A Zen master and his students were walking by the river, and the Zen master said, “Look how happy the fish are in the pond because they’re thrilled to watch the birds fly by.” A student said, “Since you’re not a fish, how do you know what makes fish happy?”

Valid question. Do you see the relevance of what we are talking about today? How do I know if the drug is curing a mouse?

It’s an ancient problem that we are dealing with. If we want to test drugs in animals, especially drugs that require a cognitive report, how are we going to do that? How do I test memory?

Here is your eye. Let’s say we’re thinking about memories only through the eyes, so we are not worrying about ears, touch, and so on. That information from the eye goes through all these complicated circuits, starting here at the back of the brain, in the primary visual cortex. Then there are a huge number of stations in between. This is an old picture; there are many more stations in between, and each one does something funky. All the way at the other end is the circuit called the hippocampus. For those of you listening who are familiar with artificial intelligence, this looks like a deep network. This is the input layer, there are a whole bunch of intermediate processing layers, and this is the output layer, and lo and behold, the output layer does abstraction, generalization, memory, and so on. It’s a really peculiar thing, and that’s called the hippocampus. That’s where Alzheimer’s begins.

The hippocampus is not only the place where Alzheimer’s begins; it’s also implicated in autism, Alzheimer’s disease and related dementias, depression, PTSD, schizophrenia, psychosis, epilepsy, and much more.

Why is it, then, that this tiny structure is involved in all these diseases? If you take any other brain structure of the same size at random, it isn’t. And none of these diseases are curable, not just Alzheimer’s, so people realize that this is a challenge. How do you ask a mouse to describe an episode? People thought, well, since we can’t have a conversation, and since Alzheimer’s patients often get lost in space as well (they forget where their home is, or they get off at the wrong stop), maybe we can probe the mouse’s ability to know where it is. Not “Am I seeing red or am I seeing blue?” but “Where am I?”

Being Patient: How is Alzheimer’s drug development currently done with mice?

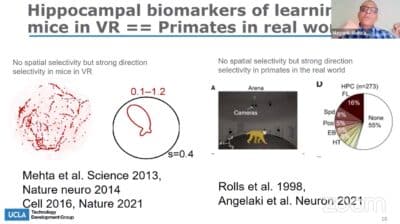

Mehta: When people develop Alzheimer’s drugs, how do they test them? They say, “All right, I’m going to develop a drug and then check the mouse’s ability to navigate in a maze,” sometimes even measuring the neural responses. Otherwise, they simply measure the mouse’s ability to find its way in the world. Now, the problem is that the spatial selectivity seen in the mouse hippocampus, where a neuron fires only when the animal is in a particular place, is not found in the human hippocampus.

You could say, well, maybe the human hippocampus is doing something else. When human beings walk around, the neurons actually care about what the person is looking at, which seems natural. On the other hand, people think mouse hippocampal neurons don’t care about what the mouse is looking at. So, it’s all kind of going in opposite directions.

One is going to the left and the other is going to the right, and the two don’t match 100 percent. That’s when I got involved in virtual reality as the solution.

Being Patient: Why is virtual reality the solution to this?

Mehta: Let’s imagine I’m a human walking around in this room. As I’m standing right now, my head is more than a meter from the ground. I don’t know exactly what’s at my feet. I certainly can’t smell my feet, heaven forbid, or anything near my feet. Now, let’s imagine there is a mouse running in the maze. Its head is like this, and its nose is right next to the ground. It has a phenomenal sense of smell; people use mice and rats to sniff out landmines half a meter below the ground. The nose is right here, and they have whiskers. We don’t have whiskers. The whiskers are highly sensitive, and they are touching the ground. The eyes are next to the ground, the ears are next to the ground, and their vision is really not as good. They’re nocturnal, and they don’t see far away.

Our hypothesis has been that because the mouse is ground-dwelling, when it explores any space, its experience of where it is differs completely from the human experience. The human sense of where I am is largely based on vision, maybe a little on sound. Occasionally, I will use smell to say, “Oh, yeah, that smells like bacon, so that must be the kitchen.” But most sighted human beings navigate using vision. Mice, on the other hand, are constantly experiencing smell, touch, and vision, all of it on the ground. Our hypothesis was that the major differences between mice and humans are because they’re having totally different experiences. Even though both of them are in space, their experiences are completely different, so the hippocampus is getting completely different inputs.

If the hypothesis is true, I should be able to either give a human being the experience of a mouse, which means an Alzheimer’s patient comes to the clinic, gets down on all fours, and walks around with their nose to the ground (not really practical), or make the mice walk a meter above the ground, which is also not practical. So, how do I make mice navigate only using vision? That’s where virtual reality comes in.

Being Patient: How did you make a virtual reality system specifically focus on vision?

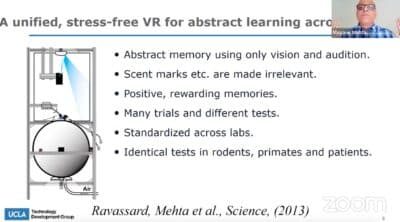

Mehta: We built this virtual reality system, and it’s a unique one. It’s not stressful. We know that many tests for Alzheimer’s disease, such as having mice swim in a tank of cold water, are highly stressful. This is non-stressful. The mouse wears a little jacket; you will soon see a picture of the mouse in virtual reality. He stands on a giant ball that floats on air. Microcontrollers and sensors pick up the movement of the ball, which is sent to the VR engine and then to a tiny pico projector above his head. The room is fairly dim because these are nocturnal creatures; unlike us, they don’t like very bright lights. The projector sends the image all around the mouse, including under his feet, which is very crucial. If you’ve ever put on standard VR goggles, you’ll know it’s a somewhat unnerving experience that you cannot see your hands or feet, which you’re always so used to seeing. In this VR, the mouse can see its paws and its own shadow.

Now the mouse has to navigate in this virtual world using only vision and maybe sound; smells and so on are irrelevant. If he goes to the right place in VR, he gets a little bit of soda, just like if I go to the kitchen, I get a drink of soda or water. You can do many, many trials, and we can standardize across labs. One can also test humans and rodents under the same conditions, because this VR is designed so that one can remove the giant ball and place the whole shell around a human patient’s head. The patient is seated comfortably in a chair. It’s a direct translation of the exact test: whether mouse or human, both stay in one place, and they have to form a map of space.

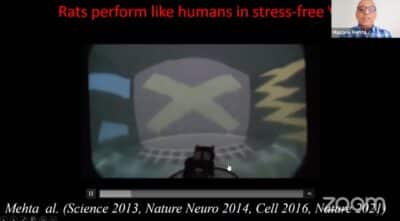

[In our VR tests with mice], we measured the neural responses of lots of neurons in the brain. We had a brilliant set of people in the lab figuring out what the neurons were doing; to make sense of the neural activity, we had to use a whole bunch of mathematics. When we did that, we found an amazing result. This thin blue line is the trajectory of the rat in the real world. The blue dots show the activity of a different neuron from the one I showed you earlier, and again, the neuron’s activity is very precise. It fires only here and nowhere else. Here is the same neuron active in virtual reality, and you can see it’s spaghetti. The neuron doesn’t care at all where the mouse is. That starts to remind you of the observation I mentioned: in the brains of primates or humans walking around, there is no spatial selectivity. These mouse hippocampal neurons don’t seem to care either. That starts to match the primate.

Then we go slightly further. We look at the activity of these neurons as a function of the mouse’s head direction. This neuron fires maximally when the mouse is looking in this direction of the maze and doesn’t fire at all in the other direction, which closely resembles what happens in human and primate brains. We conclude from this that in rodents running around in virtual reality, where only vision tells them where they are, hippocampal responses closely resemble, to a very high degree of accuracy, how the human or primate hippocampus behaves in the real world. For mice in the real world, the ground and the stuff on the ground are the most powerful stimulus. When they go into VR and that is removed, they start to look like humans, so we believe that’s the right way to test drugs in mice.

If somebody has a brand new drug and says, “I want to know if this drug is likely to cure Alzheimer’s or not in humans, not just in rats,” you need to look at the hippocampal direction selectivity in virtual reality.

Being Patient: In this talk, you’ve spoken a lot about spatial memory. In a recent study, you looked at episodic memory. Can you tell us about that?

Mehta: To generate a purely episodic memory, we just had the mouse sit in one place. On a giant surround screen, we played a human movie from the 1950s, “Touch of Evil.” The neurons in the hippocampus started to respond based on the plot as it evolved. Our partner was the Allen Institute for Brain Science, which did the study across all the kinds of structures I showed you.

We found that no matter where we looked, in the visual cortex, the LGN, or the hippocampal sub-regions, neurons largely encoded specific episodes of the movie: visual cortical neurons responded to specific visual cues, while hippocampal neurons were chunking that visual information, creating little bits of experience. So, that’s the episodic memory result.

Being Patient: What do we know about VR and its impact on the brain for humans?

Mehta: The answer is complex, and that’s just the way it is. There are many types of VR. Certain kinds of VR make people feel nauseous or dizzy, and people feel weird that they can’t see their hands and feet. We have to remove those things, because even if the experience might be beneficial, these unwanted effects could be doing other things, so the benefits don’t show up. So before I answer the question of whether the VR experience is better for certain aspects of cognition, we need to start with the kind of VR where these unwanted effects are removed. There is a fair amount of evidence that a VR experience is quite compelling at a cognitive level. And this is not just at the subjective level: a VR experience is far more memorable than, for example, watching television in the real world.

Why is that? Is it just because of the surround screen? You can have a giant surround-screen TV, and it still won’t be as immersive as a VR experience. That’s what our research has gotten down to. The main point here is that, unlike a television, which doesn’t react to your movements, VR reacts to every movement of your body. You can simply move your head a little bit here and there, and the scene changes. It turns out that there is a solid, complicated relationship, a map stored in your body and in your brain, that tells you this change in the visual scene was the result of your action.

As I said at the start, Alzheimer’s patients have a problem remembering events rather than particular sensations. Let’s do a thought experiment. You can simply sit back and move your head forward, and when you do that, the image of the entire room will come toward you, and you find that perfectly normal. Now let’s do the opposite experiment: you’re sitting still, and the entire room comes toward you. In the blink of an eye, you’re going to duck; you’re going to freak out. Or the opposite: you move your head, and the image of the room doesn’t move.

What VR does is make the world, at least the visual experience and eventually other experiences too, react to every little thing you do, even below the level of conscious awareness. Most of the time, we don’t know that we moved our eyes to the left or to the right; we’re not aware of where our eyes are going. But when I move my eyes, the visual scene doesn’t appear to zoom or jump, even though that’s what happened on my retina. That’s what is happening with VR: a complicated dialogue in which the brain works out that what my eyes are seeing, the walls coming toward me, is the result of my own action. That’s a complicated calculation. That’s what we believe starts to break down in these complex diseases, and that’s what VR allows you to probe in a systematic way, because in VR, you can decouple the action from what you see.

Being Patient: To sum it up, VR allows us to understand, number one, how neurons respond to different inputs, and number two, how to make mice act more like humans, so that we can understand the human brain and the targets we are exploring for Alzheimer’s drug therapies.

Mehta: Exactly. In addition, we find that the best way to do translation is not to rely only on self-report, human or animal, but to look inside the brain. Whether or not the mouse improved, the improvement or lack thereof could be due to God knows how many reasons. When we look inside the brain, we now have dozens of biomarkers. We found that those dozens of biomarkers in mice in virtual reality match perfectly with non-human primates, as well as with a handful of human studies. VR is an effective translational tool, and it can also do funny things to the brain: it can change brain rhythms, and it can shut down the activity of neurons. We need to [understand] that it is a very complex tool, not something that should be used willy-nilly. We should be careful and say maybe this is good for me, but we don’t know right now.

It all needs to be sorted out, and the one-line summary is that what VR does to the brain is completely different from watching TV. We must be cautious, optimistically cautious, and use this carefully. I would like to send a message to whoever might be listening: partner with us, so we can leverage this tool while avoiding any potential side effects. You don’t want those to happen.

We need to be cautious and recognize that it’s not the same thing as watching a big-screen TV. It is something else: it is reacting to our movement. At a subconscious level, it’s new territory that our brain has entered. It’s an interesting territory. It’s a powerful tool. Let’s understand it before we take it for a ride.

This interview has been edited for length and clarity.

Katy Koop is a writer and theater artist based in Raleigh, NC.
